Are you frustrated at not being understood when talking to your smart home? Are you Norwegian? Despair no more.

This is how you can use the Norwegian speech-to-text model trained by the AI Lab at the National Library of Norway (NbAiLab) on 20,000 hours of labeled audio. You can find the model files on HuggingFace.

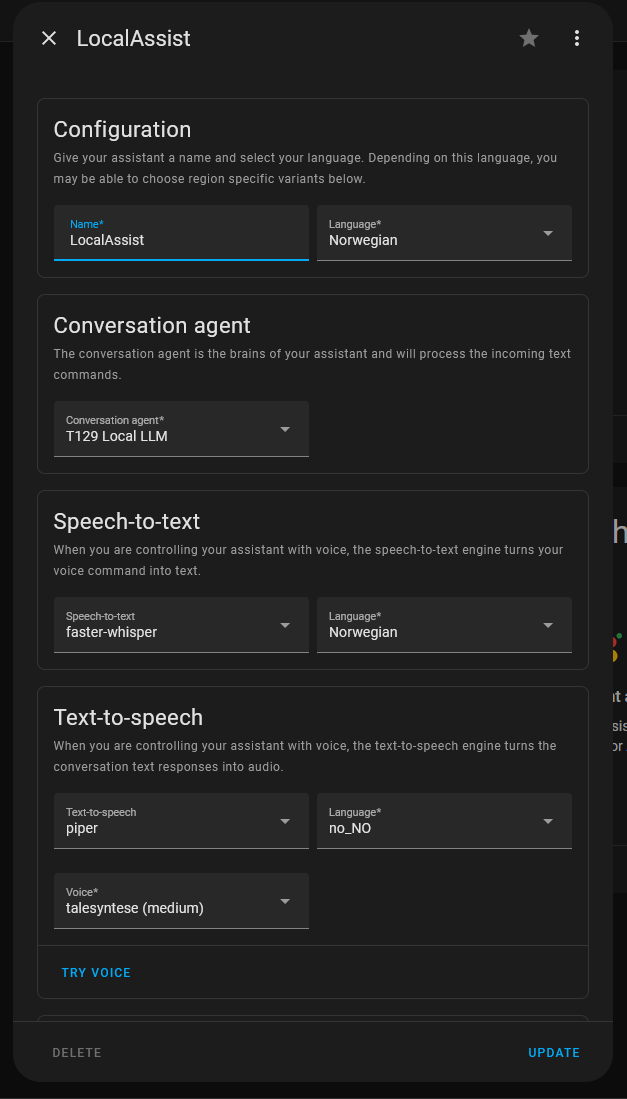

I've already written an article on how to get Whisper and Piper running locally on a Proxmox server. From there, you just set up the Wyoming integrations in Home Assistant, pointing them to your new Whisper and Piper server on ports 10300 and 10200, respectively. Now you can include Whisper and Piper in your local pipelines.
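Before adding the integrations, it can save some head-scratching to check that both ports are actually reachable from the machine running Home Assistant. Here's a minimal sketch. `check_wyoming_ports` is just a name I made up, and it assumes a netcat build that supports `-z` (connect without sending data) and `-w` (timeout), such as OpenBSD netcat:

```shell
#!/bin/sh
# Hypothetical helper: check that the Whisper (10300) and Piper (10200)
# Wyoming ports accept TCP connections on the given host.
# Assumes a netcat that supports -z and -w.
check_wyoming_ports() {
    host="$1"
    status=0
    for port in 10300 10200; do
        if nc -z -w 3 "$host" "$port" >/dev/null 2>&1; then
            echo "$host:$port reachable"
        else
            echo "$host:$port NOT reachable"
            status=1
        fi
    done
    # Non-zero if at least one port could not be reached
    return "$status"
}
```

Run it as `check_wyoming_ports 192.168.1.50`, replacing the placeholder address with your server's IP. A reachable port only tells you that something is listening, not that the model is ready.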

The rhasspy/wyoming-whisper Docker image is pretty awesome, though, because it lets you use models other than the ones listed in its docs. To get this nicely trained Norwegian speech-to-text model working with the Wyoming integration, simply change ONE LINE OF CODE in your docker-compose.yml file.

Instead of this:

```yaml
command: --model medium-int8 --language no --beam-size 5 --device cuda
```

Do this:

```yaml
command: --model NbAiLab/nb-whisper-medium --language no --beam-size 5 --device cuda
```

And run:

```shell
docker compose up -d
```
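If you end up switching models a lot, the one-line change can be scripted. A minimal sketch, assuming the model is set on a `command:` line like the ones above. `set_whisper_model` is a hypothetical helper, not something that ships with the image:

```shell
#!/bin/sh
# Hypothetical helper: replace the value after --model on the `command:`
# line of a compose file, keeping a .bak copy of the original.
set_whisper_model() {
    file="$1"
    model="$2"
    # '#' is the sed delimiter because HuggingFace model ids contain '/'.
    # -i.bak (suffix attached) works with both GNU and BSD sed.
    sed -i.bak -E "/command:/ s#(--model )[^ ]+#\1${model}#" "$file"
}
```

For example: `set_whisper_model docker-compose.yml NbAiLab/nb-whisper-medium && docker compose up -d`. The previous version of the file is kept as `docker-compose.yml.bak`, in case you want to roll back.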

Be patient after the first call to the model: it has to be downloaded and loaded before it can answer, which takes a while. After that, it responds perfectly.
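If you'd rather not keep retrying by hand, you can poll instead. This is a rough sketch with a hypothetical `wait_until` helper that re-runs a command every 5 seconds until it succeeds or a time limit runs out. Keep in mind that an open port is only a proxy for readiness; following the container output with `docker compose logs -f` is another way to see what's going on:

```shell
#!/bin/sh
# Hypothetical helper: run a command repeatedly until it succeeds,
# giving up after roughly MAX_SECONDS.
# Usage: wait_until MAX_SECONDS CMD [ARGS...]
wait_until() {
    max_wait="$1"
    shift
    elapsed=0
    interval=5
    until "$@" >/dev/null 2>&1; do
        if [ "$elapsed" -ge "$max_wait" ]; then
            echo "gave up after ${max_wait}s: $*" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
    echo "ready after ~${elapsed}s"
}
```

For example, `wait_until 600 nc -z -w 3 localhost 10300` waits up to about ten minutes for the Whisper port (again assuming a netcat that supports `-z`).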

So enjoy your Norwegian model.

Oh - and if you want to run ANY other Whisper model from HuggingFace, just replace NbAiLab/nb-whisper-medium with whichever HuggingFace model ID suits your use case (as far as I know, it needs to be available in the CTranslate2 format used by faster-whisper, which the image is built on). For example, if you are short on VRAM, try the smaller NbAiLab/nb-whisper-tiny variant, or look for a model trained specifically for your language.